I really enjoyed a recent paper by Nathan Hodas and Kristina Lerman called The Simple Rules of Social Contagion. It resonates strikingly with my own work. They start by asking why “social contagion” (the spreading of memes) does not behave like contagion proper as described by SIR models, in the sense that, on a given network of interactions, social contagion spreads more slowly and less far than actual epidemics. The way they answer this question is really nice, and so are their results.

Their result is the following: social contagion effects can be broken down into two components. One is indeed a simple SIR-style epidemic model; the other is a dampening factor that takes into account the cognitive limits of highly connected individuals. The idea is that catching the flu does not require any expenditure of energy, whereas resharing something on the web does: you have to devote some attention to it before you can decide it is worth resharing. The critical point is this: highly connected individuals (network hubs) are exposed to more information than less connected ones, because their richer web of relationships entails more exposure. Therefore, they end up with a higher attention threshold. So, in contagion proper, wherever the infection hits a network hub, diffusion skyrockets: hubs unambiguously help the infection spread. In social contagion, when a meme hits a hub, diffusion can still skyrocket if the meme makes it past the hub’s attention threshold, but it can also slow down if it does not. Hubs are both enhancers (via connectivity) and dampeners (via attention deficit) of contagion. This way of looking at things resonates with economists: their models work well only where there is a scarce resource (here, attention).
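To make the double role of hubs concrete, here is a toy sketch in Python. It is not the paper's model. It simply assumes, hypothetically, that every user can attend to at most `attention_budget` items per interval, picks uniformly among whatever arrives, and that each friend posts `posts_per_friend` items per interval. The function names and parameters are illustrative only.

```python
def prob_item_seen(n_friends: int, posts_per_friend: float, attention_budget: float) -> float:
    """Probability that one particular incoming item gets the user's attention.

    Hypothetical toy assumption: the user attends to at most `attention_budget`
    items per interval, chosen uniformly from everything in the feed.
    """
    incoming = n_friends * posts_per_friend  # feed volume grows with connectivity
    if incoming <= attention_budget:
        return 1.0  # the user can read everything
    return attention_budget / incoming  # attention is spread over more items


def expected_reach(n_friends: int, posts_per_friend: float, attention_budget: float) -> float:
    """Expected number of followers reached if the user reshares whatever she sees.

    Toy assumption: followers == friends (symmetric ties), so connectivity
    amplifies reach while divided attention dampens the chance of seeing the item.
    """
    return n_friends * prob_item_seen(n_friends, posts_per_friend, attention_budget)


if __name__ == "__main__":
    for n in (10, 100, 1000):
        p = prob_item_seen(n, posts_per_friend=2.0, attention_budget=50.0)
        r = expected_reach(n, posts_per_friend=2.0, attention_budget=50.0)
        print(f"{n:>5} friends: P(seen) = {p:.3f}, expected reach = {r:.1f}")
```

With these made-up numbers, a user with 10 friends sees every item, while users with 100 or 1,000 friends see only 25% and 2.5% of them, so expected reach plateaus at 25: the hub's extra connections are cancelled out by its diluted attention.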

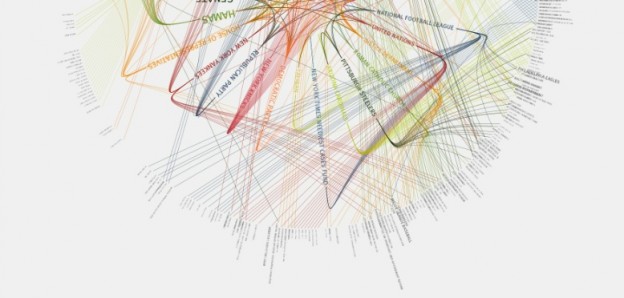

Their method is also sweet. They consider two social networks, Twitter and Digg. For each they build an exposure response function, which gives the probability that a user exposed to a certain URL retweets it (Twitter) or votes for it (Digg). This function is in turn broken into two components: the visibility of incoming messages (exposures) and a social enhancement factor (if you know that your friends are spreading a certain piece of content, you might be more likely to spread it yourself). The paper therefore tracks the visibility of each exposure through a time response function (the probability that a user retweets or votes for a URL as a function of the time elapsed since exposure and of their number of friends). At the highest level, this is modeled as a multiplication: the probability that an individual with $n_f$ friends becomes infected by the meme after $n_e$ exposures is the product of the social enhancement factor times the probability of finding any of the $n_e$ exposures during the time interval considered.
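The multiplicative decomposition can be illustrated with a short Python sketch. The first function estimates an empirical exposure response curve from hypothetical `(n_exposures, adopted)` records, one per user-URL pair; the second backs out the social enhancement factor by dividing that curve by a visibility probability, which follows directly if the response is the product of the two. The visibility values would have to come from elsewhere (in the paper, from the time response function); here they are a hypothetical input, and for simplicity the sketch ignores the dependence on the number of friends.

```python
from collections import defaultdict


def exposure_response(records):
    """Empirical exposure response: fraction of users who adopted, by number of exposures.

    `records` is a hypothetical iterable of (n_exposures, adopted) pairs,
    one per user-URL pair.
    """
    exposed = defaultdict(int)
    adopted = defaultdict(int)
    for n_e, did_adopt in records:
        exposed[n_e] += 1
        if did_adopt:
            adopted[n_e] += 1
    return {n_e: adopted[n_e] / exposed[n_e] for n_e in sorted(exposed)}


def social_enhancement(response, visibility):
    """Back out the enhancement factor, assuming response = enhancement * visibility.

    Entries with zero (or missing) visibility are skipped, since the factor
    cannot be identified there.
    """
    return {
        n_e: p / visibility[n_e]
        for n_e, p in response.items()
        if visibility.get(n_e, 0) > 0
    }


if __name__ == "__main__":
    # Hypothetical data: four users saw the URL once (one adopted), two saw it twice (one adopted).
    records = [(1, True), (1, False), (1, False), (1, False), (2, True), (2, False)]
    response = exposure_response(records)  # {1: 0.25, 2: 0.5}
    visibility = {1: 0.5, 2: 0.8}  # hypothetical P(exposure is seen)
    print(social_enhancement(response, visibility))  # {1: 0.5, 2: 0.625}
```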

At this point, the authors do something neat: they model the precise form of the user response function based on the specific characteristics of the Twitter and Digg user interfaces. On Twitter, they reason, the user scans the screen top to bottom, so her probability of becoming infected by one tweet can reasonably be assumed to be independent of her probability of becoming infected by any other tweet. Suppose the same URL appears twice in the user’s feed (meaning two of the people she follows have retweeted it): then the probability that she does not become infected is the probability of not being infected by the first tweet times the probability of not being infected by the second. For Digg, they explicitly model the social signal given by “a badge next to the URL that shows the number of friends who voted for the URL”. So, they are accounting for design choices in social software to model how information spreads across it, something I have myself been going on about for a few years now.
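The Twitter independence assumption boils down to a standard rule: if each exposure $i$ independently infects the user with probability $p_i$, the probability of escaping infection is $\prod_i (1 - p_i)$, and the probability of becoming infected is one minus that. A minimal Python sketch follows; the per-exposure probabilities are hypothetical inputs, not values from the paper, and the Digg badge signal is not modeled.

```python
import math
from typing import Iterable


def p_infected_independent(exposure_probs: Iterable[float]) -> float:
    """Probability of becoming infected by at least one of several exposures.

    Assumes each exposure acts independently: P(not infected) is the product
    of the per-exposure probabilities of not being infected.
    """
    p_not_infected = math.prod(1.0 - p for p in exposure_probs)  # math.prod needs Python 3.8+
    return 1.0 - p_not_infected


if __name__ == "__main__":
    # Same URL seen twice, e.g. retweeted by two followees (hypothetical probabilities).
    print(p_infected_independent([0.1, 0.2]))  # about 0.28
```

Two exposures at 0.1 and 0.2 give about 0.28 rather than 0.3, because the two independent "infection events" can both occur and are only counted once.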

This kind of research can be elusive: for example, Twitter is at its core a set of APIs that can be queried in a zillion different ways. Accounting for the user interfaces of the different apps people use to read the Twitter stream can be challenging: the paper itself at some point states that “the Twitter user interface offered no explicit social feedback”, which is not quite how I perceive it. But never mind that: the route is traced. If you can quantify the effects of user interfaces on the spreading of information in networks, you can also design for the desired effects in a rigorous way. The implications for those of us who care about collective intelligence are straightforward: important conversations should be moved online, where stewardship is easy(-ier) and cheap(-er).

Note: equation (2) in the paper is missing some brackets.