I am just back from Dublin, where I attended Policy Making 2.0, a meetup of people who care about public policy and try to apply advanced modelling techniques and lots of computation to it. Big data, network analysis, sentiment analysis: the whole package. What results, if any, are we getting? What problems are blocking our way? What technology do we need to make progress? Lots of notes to compare. Thanks are due (again!) to David Osimo, the main hub of this small community, for organizing the conference and bringing us together.

At the end of it all, I have good news, bad news and excellent news.

Good news: we are starting to see modelling that actually works, in the sense of making a real contribution to understanding intricate problems. A nice example is [GLEAM](http://www.gleamviz.org/), which simulates epidemics. What’s interesting is that it uses real-world data, both demographic (population and its spatial distribution) and on transportation networks (infectious agents travel with infected people, by plane or by train). To these you add the data describing the epidemic you are trying to simulate: how infectious is it? How serious? Where does the first outbreak start? And so on. The modeller then puts it all together into a simulation scenario.

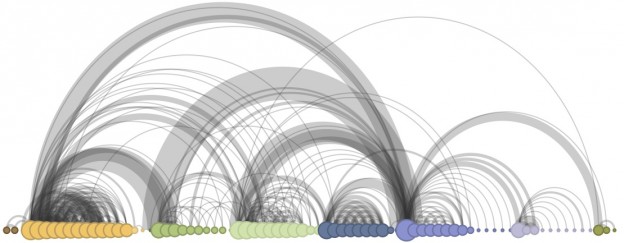
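To make the idea of combining demographic data, a travel network and epidemic parameters a little more concrete, here is a toy sketch of a metapopulation SIR model: each city runs its own susceptible/infected/recovered dynamics, and a travel table moves a fraction of every compartment, infected people included, between cities each day. This is not GLEAM's code, and its real model and data are far richer. The names `Patch`, `step` and `simulate`, and every number (the infection rate `beta`, the recovery rate `gamma`, the travel fractions, the city populations), are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Patch:
    """One population centre (e.g. a city) in a hypothetical toy model."""
    name: str
    s: float  # susceptible people
    i: float  # infected people
    r: float  # recovered people


def step(patches, travel, beta, gamma):
    """Advance a deterministic, discrete-time SIR model by one day.

    travel[a][b] is the (hypothetical) fraction of patch a's population
    that moves to patch b each day; infected people travel like everyone else.
    """
    # 1. Local epidemic dynamics inside each patch.
    for p in patches:
        n = p.s + p.i + p.r
        new_inf = beta * p.s * p.i / n if n > 0 else 0.0
        new_rec = gamma * p.i
        p.s -= new_inf
        p.i += new_inf - new_rec
        p.r += new_rec

    # 2. Mobility: accumulate flows first, then apply them, so every
    #    outflow is computed from the same pre-travel snapshot.
    flows = {p.name: [0.0, 0.0, 0.0] for p in patches}
    for p in patches:
        for dest, frac in travel.get(p.name, {}).items():
            for k, attr in enumerate(("s", "i", "r")):
                moved = frac * getattr(p, attr)
                flows[p.name][k] -= moved
                flows[dest][k] += moved
    for p in patches:
        ds, di, dr = flows[p.name]
        p.s += ds
        p.i += di
        p.r += dr


def simulate(days, patches, travel, beta=0.3, gamma=0.1):
    """Run the toy model and return the rounded number infected per patch."""
    for _ in range(days):
        step(patches, travel, beta, gamma)
    return {p.name: round(p.i) for p in patches}
```

For example, seeding ten cases in a hypothetical city A and none in city B, then calling `simulate(30, [a, b], {"A": {"B": 0.01}, "B": {"A": 0.01}})`, shows infections reaching B only because people travel; with an empty travel table B stays at zero. Scenario inputs such as where the first outbreak starts map directly onto the initial values of the patches.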

Bad news: making models that are both rigorous AND legible is very hard – no wonder we usually can’t. The rigorous ones fully take on board the complexity of the phenomena they attempt to describe, with the result that they often cannot give a simple answer beyond “it depends”; the legible ones (in the sense that their results are easy to understand, often thanks to shiny visualizations) pay for that surface clarity by sweeping under the carpet how they arrive at those results – at least as far as most citizens and decision makers are concerned. The problem gets harder still when Big Data come into play, because Big Data force us to rethink what we mean by “evidence” (this argument deserves its own post, so I will not make it here).

Excellent news: the community of researchers and policy makers seems to be converging on the following view. Public policies will make the real leap into the future when they are able to devolve power and leadership to an ever smarter and better informed citizenry. That is, when they become transparent, participatory, enabling and humble. Technology is fine: we need it. But without a deep fix in the way we think about and run policy, future public institutions risk looking much like the Habsburg Empire’s Cadastral Service, circa 1840 (rigid hierarchies, tight formal rules, poor handling of exceptions, airtight separation between administrations and civil society, communication with citizens only through regulation…), only with computers and perhaps infographics. Over coffee breaks, we mused a lot about iatrogenics (public policies that, though well-meaning, end up doing harm for lack of the intellectual humility to leave alone a complex system that is not properly understood) and about transparency as a trust generator, as well as a goal in itself. We also fantasized about public-private partnerships to troubleshoot policy when the normal operating mode fails, a sort of commando unit of social innovators and civic hackers. This would be my dream job! The Dutch Kafka Brigades gave it a try, but judging from its website the project does not seem very active.

The community has spoken. We’ll see whether the Commission and national policy makers pick up on this consensus, and how. Of course, reform that goes this deep is really hard, and does not depend on the goodwill of individual decision makers. The wisest thing we can do, maybe, is push the edge a little further out, without too many expectations. But without giving up, either. Because – and today I am a little more optimistic – we have not quite lost this one yet.

Great summary, Alberto! I, too, thoroughly enjoyed the conference and really appreciate you sharing this post. One other comment I would make concerns the huge challenge of making the Policy Making 2.0 tools MULTILINGUAL! When we’re talking about Big Data, there is virtually no platform (not even Hadoop) that can manage it seamlessly (but maybe you can make my day with some more “excellent news”… if you know of one!). And on the topic of sentiment analysis, opinion mining, the semantic web, etc., there have been some advances in effective multilingual algorithms but, again, nothing completely seamless. I will DM you more about this. Thanks again! P.S. Which Italian paper published it?

Megan Browne

EU Community Director

EurActiv